Most businesspeople have encountered SAP at one time or another. The legacy enterprise resource planning (ERP) system has established itself as a dependable accounting system with a massive database, which businesses around the world have come to rely on. It’s especially popular in manufacturing, retail, and food.

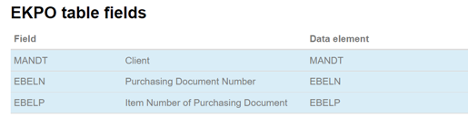

While it was the gold standard in its heyday, SAP is not without its flaws. It has always been notoriously difficult to configure; for example, as shown in the table below, many users have issues when translating SAP’s four-character table names and five-character field names into meaningful business context or metadata.
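To make the naming problem concrete, here is a minimal sketch of a lookup that renames SAP technical names to business-friendly ones. The codes used (KNA1, VBAK, VBAP, MARA, KUNNR, VBELN, ERDAT, NETWR, MATNR) are standard SAP table and field names, but the friendly labels and the `to_business_names` helper are illustrative assumptions, not part of any SAP or Matillion API.

```python
# Hypothetical data dictionary: SAP technical names -> business-friendly names.
TABLE_NAMES = {
    "KNA1": "customer_master",
    "VBAK": "sales_order_header",
    "VBAP": "sales_order_item",
    "MARA": "material_master",
}

FIELD_NAMES = {
    "KUNNR": "customer_number",
    "VBELN": "sales_document_number",
    "ERDAT": "created_on",
    "NETWR": "net_value",
    "MATNR": "material_number",
}


def to_business_names(table, row):
    """Rename one SAP row; names missing from the dictionary pass through unchanged."""
    friendly_table = TABLE_NAMES.get(table, table)
    friendly_row = {FIELD_NAMES.get(key, key): value for key, value in row.items()}
    return friendly_table, friendly_row


table, row = to_business_names(
    "VBAK", {"VBELN": "0000012345", "KUNNR": "0000100001", "NETWR": 250.0}
)
print(table, row)
```

Letting unknown names pass through unchanged keeps custom tables and fields visible, so gaps in the dictionary show up in the output instead of silently disappearing.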

Once SAP is up and running, data analysts have everything they need in theory, but the platform was never designed for analytics or reporting. An additional subscription to SAP BW or SAP BW/4HANA is required for that. Most people in this position opt to move their SAP data to Snowflake, a move that has become essential but is not easy. Entire consultancies have been built around this one complex task.

Enter Matillion: This award-winning cloud-native ETL solution grabs data from SAP and lands it inside Snowflake to deliver reports in a consumable format. Let’s look at some tips for using Matillion to conquer your data migration.

For starters, don’t set out to “boil the ocean” by grabbing all SAP data at once. There are far too many domains to consider, from product and pricing to shipping and supply chain. Start with a proof of concept (POC) to stay on track: scope the work narrowly so you can validate your assumptions and your infrastructure configuration, which reduces risk.

Next, define the scope of data. Do you want to look at customers and sales over the last 12 months? Maybe you’d prefer activity for the last quarter? Whatever you choose, all parties involved must decide on the parameters as soon as possible. Aim for a development timebox of two to four weeks, which gives you ample opportunity for end-to-end solution building.
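A scope such as "the last 12 months" eventually has to become a concrete date filter. The sketch below shows one way to compute it, assuming the source date field is stored as a `YYYYMMDD` string (the usual layout of SAP date fields such as ERDAT). The helper names and the shape of the filter expression are hypothetical, and how the filter is handed to your extraction tool will depend on that tool.

```python
from datetime import date


def months_back(today, months):
    """Return the first day of the month that lies `months` months before `today`."""
    total = today.year * 12 + (today.month - 1) - months
    return date(total // 12, total % 12 + 1, 1)


def scope_filter(date_field, today, months):
    """Build a filter expression on a YYYYMMDD date field (illustrative format)."""
    start = months_back(today, months)
    return f"{date_field} >= '{start:%Y%m%d}'"


# Last 12 months and last quarter, as seen from 15 June 2024.
print(scope_filter("ERDAT", date(2024, 6, 15), 12))
print(scope_filter("ERDAT", date(2024, 6, 15), 3))
```

Snapping the window to the first day of a month keeps the boundary easy to explain to everyone who signs off on the scope.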

Once you establish parameters, you can begin processing raw data in its native format to build a data model. The goal is a business-user-friendly taxonomy, so that users can consume the data whether it’s raw or converted.

Each domain of data will require its share of legwork. Start by taking a careful inventory of the information you have in each one. You’ll find data from a wide range of sources, including SAP itself, Salesforce, third-party data, competitive pricing data, UPS, FedEx, and a lot more.

Once you identify which parts you need, it’s time to pull the data with the perfect tool for the job. Matillion is ideal for this data ingestion process as it enables you to easily view the tables you want to connect with and be very selective. This process of connecting to the data and extracting it takes roughly one hour.

Traditionally, most data was pushed downstream: the information would be grabbed, transformed, and then loaded into a database. Now, with brilliant tools like Matillion, you can grab data and transform it at any point you want through a simple drag-and-drop process. Once data is retrieved from outside sources, it can be landed in the data lake. From there, it’s a simple matter of establishing validation rules and landing it inside Snowflake in the desired data model.
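Validation rules can start very small. The following is a minimal sketch of rule checks applied to rows before they are landed in the target model. The field names (`customer_number`, `net_value`, `created_on`) and the three rules are assumptions chosen for illustration; your own rules will come from the business, and in practice they may be configured in your ETL tool instead of written by hand.

```python
def validate_order(row):
    """Return a list of rule violations for one order row (empty if the row is clean)."""
    errors = []
    if not row.get("customer_number"):
        errors.append("missing customer_number")
    if row.get("net_value") is None or row["net_value"] < 0:
        errors.append("net_value must be non-negative")
    created = str(row.get("created_on", ""))
    if not (len(created) == 8 and created.isdigit()):
        errors.append("created_on must be YYYYMMDD")
    return errors


def split_valid(rows):
    """Separate rows that pass every rule from rows that need review."""
    valid, rejected = [], []
    for row in rows:
        problems = validate_order(row)
        if problems:
            rejected.append((row, problems))
        else:
            valid.append(row)
    return valid, rejected


rows = [
    {"customer_number": "100001", "net_value": 250.0, "created_on": "20240115"},
    {"customer_number": "", "net_value": -5.0, "created_on": "2024-01-15"},
]
valid, rejected = split_valid(rows)
print(len(valid), "valid;", len(rejected), "rejected")
```

Keeping the rejected rows together with their reasons, instead of dropping them, gives the business a concrete list to fix at the source.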

Now’s the time to get as granular as you want. At this point, you can pull customer sales, orders, fulfillment details, net profits, or even part numbers to produce profitability data sets. Once this is done, Snowflake will hypothetically have the data and will be able to prepare it for public consumption.
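As a rough illustration of a profitability data set, the sketch below sums revenue minus cost per part number. The input layout (`part_number`, `revenue`, `cost`) is a hypothetical, already-cleaned order-line structure, not an SAP or Snowflake schema, and a real model would usually do this aggregation inside the warehouse.

```python
from collections import defaultdict


def profit_by_part(order_lines):
    """Sum (revenue - cost) per part number."""
    totals = defaultdict(float)
    for line in order_lines:
        totals[line["part_number"]] += line["revenue"] - line["cost"]
    return dict(totals)


order_lines = [
    {"part_number": "A", "revenue": 100.0, "cost": 60.0},
    {"part_number": "A", "revenue": 50.0, "cost": 30.0},
    {"part_number": "B", "revenue": 20.0, "cost": 25.0},
]
print(profit_by_part(order_lines))
```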

Alternatively, the data can be released in a raw format and turned over to a data science group, which will then use machine learning to sort through your unfettered information. This method has its benefits, as the raw data will be untouched by transformation or business logic.

Whether you keep your data in-house or send the raw version out for processing, the final step in ensuring a smooth transition is to validate that SAP data has landed correctly in Snowflake. The fun part starts now, as you can begin to visualize and report on data using your business intelligence tool of choice.
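One simple way to check that data has landed correctly is to compare a few summary metrics between source and target. The sketch below compares row counts and a column total. It assumes both sides have already been extracted into lists of dictionaries, and the function names and the `net_value` field are hypothetical. A real reconciliation would typically run the equivalent aggregate queries in SAP and in Snowflake.

```python
def summarize(rows, amount_field):
    """Row count plus a rounded total of one numeric column."""
    total = round(sum(row[amount_field] for row in rows), 2)
    return {"row_count": len(rows), "total": total}


def reconcile(source_rows, target_rows, amount_field):
    """Compare source and target summaries; return only the metrics that differ."""
    source = summarize(source_rows, amount_field)
    target = summarize(target_rows, amount_field)
    return {
        metric: (source[metric], target[metric])
        for metric in source
        if source[metric] != target[metric]
    }


source_rows = [{"net_value": 100.0}, {"net_value": 50.5}]
target_rows = [{"net_value": 100.0}]  # one row went missing in this example
print(reconcile(source_rows, target_rows, "net_value"))
```

An empty result means the two sides agree on every metric checked. It does not prove the rows are identical, so treat it as a first gate before more detailed comparisons.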

As simple as the above steps might seem on paper, they amount to quite a data journey. Wavicle aims to be by your side for the entirety of it, working towards your organization’s cloud independence. Throughout your data transformation, Wavicle takes on much of the legwork.

All of this is done in accordance with your organization’s unique security practices: Snowflake’s security model can be aligned with your unique structure. And of course, our processes comply with the requirements of all known regulatory agencies.

Data is only as valuable as our ability to comprehend it. And as legacy systems rise and fall, the rush to migrate information to the next up-and-coming platform can feel increasingly urgent. That’s why Matillion might just be your new best friend and partner in data transformation—as long as you keep our 5 easy steps in mind along the way.

Need an experienced partner to help migrate your data from legacy systems? Reach out to Wavicle’s data migration consulting team today.