If your data lakes have grown to the extent that analytics performance is suffering, you’re not alone. The very nature of data lakes, which allows organizations to quickly ingest raw, granular data for deep analytics and exploration, can stand in the way of fast, accurate insights.

Data lakes remain a go-to repository for storing all types of structured and unstructured, historical and transactional data. But with the high volume of data that is added every day, it is very easy to lose control of indexing and cataloging the contents of the data lake.

The data becomes unreliable, inconsistent, and generally hard to find. This has several effects on the business, ranging from poor decisions based on delayed or incomplete data to an inability to meet compliance mandates.

Databricks has designed a new solution to restore reliability to data lakes: Delta Lake. Based on a webinar Wavicle delivered with Databricks and Talend, this article will explore the challenges that data lakes present to organizations and explain how Delta Lake can help.

Data lakes were designed to solve the problem of data silos, providing access to a single repository of any type of data from any source. Yet most organizations find it impossible to keep up with the extreme growth of data lakes.

The term “data swamp” describes a data lake that has no curation or data lifecycle management and little to no contextual metadata or data governance. In a data swamp, the way data is stored makes it hard to use, or even unusable.

Users may start to complain that analytics are slow, data is unreliable or inconsistent, or it simply takes too long to find the data they need. These performance and reliability problems have a variety of causes, including:

Databricks has created Delta Lake, which solves many of these challenges and restores reliability to data lakes with minimal changes to data architecture. Databricks defines Delta Lake as an open-source “storage layer that sits on top of data lakes to ensure reliable data sources for machine learning and other data science-driven pursuits.” Several features of Delta Lake enable users to query large volumes of data for accurate, reliable analytics. These include ACID compliance, time travel (data versioning), unified batch and streaming processing, scalable storage, metadata, and schema check and validation. Addressing the major challenges of data lakes, Delta Lake delivers:

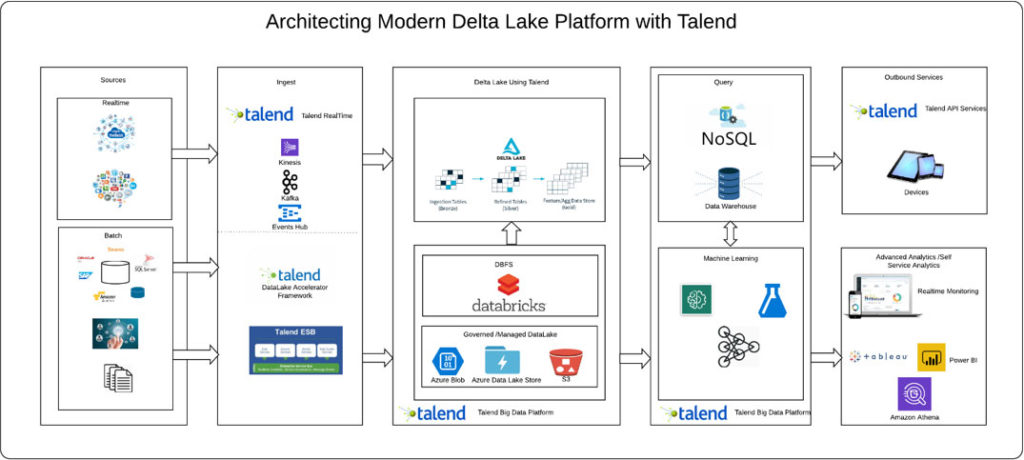

Below is a sample architecture of a Delta Lake platform. In this example, the data lake runs on the Microsoft Azure cloud platform, with Azure Blob Storage for storage and an analytics layer consisting of Azure Data Lake Analytics and HDInsight. An alternative would be to use Azure Blob Storage with no compute attached to it.

Alternatively, in an Amazon Web Services (AWS) environment, the data lake can be built on Amazon S3, with all other analytical services sitting on top of S3.

In this example, Talend provides data integration. It offers a rich set of built-in connectors, including MQTT and AMQP connectors for real-time streams, allowing easy ingestion of real-time, batch, and API data into the data lake environment. Talend has voiced its support for Delta Lake, committing to “natively integrate data from any source to and from Delta Lake.”

The following instructions show how to convert a data lake to Delta Lake and work with Delta tables, using Talend for data integration.

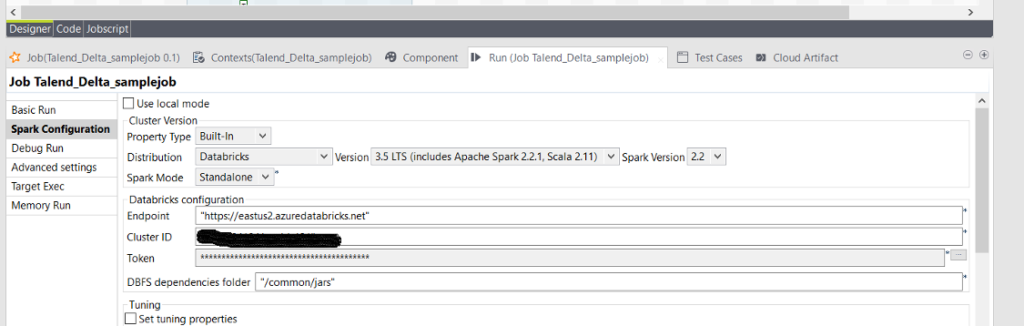

Configuration: In the Run tab, set up the Spark Configuration for the Big Data Batch job. Set the distribution to Databricks and select the corresponding version. In the Databricks section, enter the Databricks Endpoint (Azure or AWS), the Cluster ID, and the Authentication Token.
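Before running the job, it can help to confirm that the endpoint, cluster ID, and token work together. The sketch below is one way to do that outside Talend, assuming the workspace exposes the Databricks REST API 2.0 `clusters/get` endpoint and that the token is a personal access token. The workspace URL, cluster ID, and token are hypothetical placeholders.

```python
import urllib.parse
import urllib.request


def cluster_check_request(endpoint: str, cluster_id: str, token: str) -> urllib.request.Request:
    """Build (without sending) a GET request to the Databricks Clusters API.

    Uses the same three values entered in Talend's Databricks section:
    the workspace endpoint, the cluster ID, and an access token.
    """
    base = endpoint.rstrip("/")  # tolerate a trailing slash in the endpoint
    query = urllib.parse.urlencode({"cluster_id": cluster_id})
    return urllib.request.Request(
        f"{base}/api/2.0/clusters/get?{query}",
        headers={"Authorization": f"Bearer {token}"},  # token-based auth
        method="GET",
    )


# Hypothetical placeholder values; substitute your own workspace settings.
req = cluster_check_request(
    "https://adb-1234567890123456.7.azuredatabricks.net/",
    "0123-456789-abcde123",
    "dapi-example-token",
)
print(req.full_url)
# To actually check: urllib.request.urlopen(req) returns the cluster's JSON
# description on success and raises urllib.error.HTTPError otherwise.
```

The request is only built, not sent, so the sketch runs without network access or real credentials.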

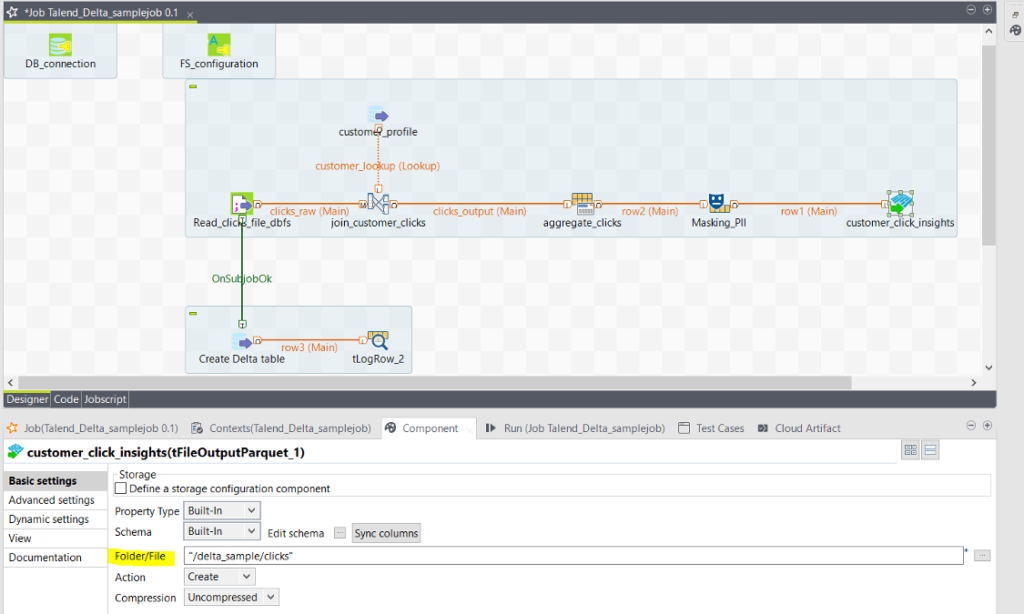

Sample Flow: In this sample job, click events are collected from the mobile app, joined against the customer profile, and written as a Parquet file to DBFS (the Databricks File System). The next step uses this DBFS file to create a Delta table.
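The join in this flow is configured inside the Talend job. The sketch below only illustrates what joining events to profiles produces, as a simple hash join in plain Python. The field names `customerId` and `segment`, and the choice of an inner rather than a left join, are assumptions.

```python
from typing import Iterable


def enrich_clicks(clicks: Iterable[dict], profiles: Iterable[dict]) -> list:
    """Inner-join click events to customer profiles on customerId.

    The profile side is loaded into a dict so each click costs one lookup
    (a simple hash join). Clicks with no matching profile are dropped.
    """
    by_customer = {p["customerId"]: p for p in profiles}
    enriched = []
    for click in clicks:
        profile = by_customer.get(click["customerId"])
        if profile is None:
            continue  # inner join: no profile, no output row
        enriched.append({**click, **profile})  # add profile fields to the event
    return enriched


# Hypothetical sample data
clicks = [
    {"eventId": "e1", "customerId": "c1", "eventType": "tap"},
    {"eventId": "e2", "customerId": "c9", "eventType": "view"},
]
profiles = [{"customerId": "c1", "segment": "gold"}]
rows = enrich_clicks(clicks, profiles)
print(rows)  # [{'eventId': 'e1', 'customerId': 'c1', 'eventType': 'tap', 'segment': 'gold'}]
```

Switching to a left join would mean keeping clicks without a profile and filling the profile fields with `None`.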

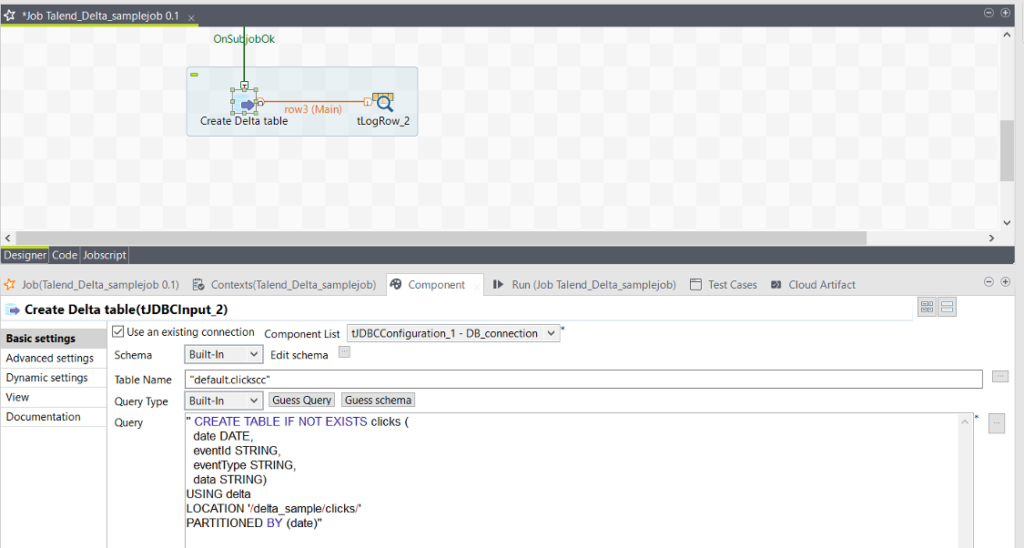

Create Delta Table: Creating a Delta table requires the `USING DELTA` clause in the DDL. In this case, because the file is already in DBFS, the DDL also specifies its location so the table reads the existing data.

Convert to Delta table: If the source files are in Parquet format, the SQL `CONVERT TO DELTA` statement converts them in place into an unmanaged Delta table:

SQL:

```sql
CONVERT TO DELTA parquet.`/delta_sample/clicks`
```
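Converting in place is cheap because the Parquet data files are not rewritten; Delta records them in a transaction log stored in a `_delta_log` directory next to the data. The toy model below, assuming an unpartitioned directory, writes a version-0 commit that lists each existing file as an `add` action. It is a simplification: a real commit also carries protocol and schema (`metaData`) actions, and partitioned Parquet directories need a `PARTITIONED BY` clause on `CONVERT TO DELTA`. The function name is hypothetical.

```python
import json
import tempfile
from pathlib import Path


def convert_to_delta_sketch(table_dir: str) -> Path:
    """Toy model of CONVERT TO DELTA on an unpartitioned Parquet directory.

    Existing Parquet files are left untouched; they are only registered as
    "add" actions in a version-0 commit file under _delta_log/.
    Protocol and metaData (schema) actions are omitted in this sketch.
    """
    root = Path(table_dir)
    log_dir = root / "_delta_log"
    log_dir.mkdir(exist_ok=True)
    commit = log_dir / f"{0:020d}.json"  # versions are zero-padded to 20 digits
    with commit.open("x") as fh:  # "x" fails if version 0 already exists
        for data_file in sorted(root.rglob("*.parquet")):
            stat = data_file.stat()
            action = {"add": {
                "path": data_file.relative_to(root).as_posix(),
                "partitionValues": {},  # empty because the table is unpartitioned
                "size": stat.st_size,
                "modificationTime": int(stat.st_mtime * 1000),  # milliseconds
                "dataChange": True,
            }}
            fh.write(json.dumps(action) + "\n")
    return commit


# Usage: two stand-in "Parquet" files in a throwaway directory.
with tempfile.TemporaryDirectory() as demo:
    Path(demo, "part-00000.parquet").write_bytes(b"PAR1")
    Path(demo, "part-00001.parquet").write_bytes(b"PAR1PAR1")
    commit_file = convert_to_delta_sketch(demo)
    actions = [json.loads(line) for line in commit_file.read_text().splitlines()]

print(commit_file.name)  # 00000000000000000000.json
print([a["add"]["path"] for a in actions])
```

Opening the commit with exclusive-create mode mirrors the idea that each log version is written exactly once, which is what lets concurrent writers detect conflicts.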

Partition data: Delta Lake supports partitioned tables. Partitioning speeds up queries whose predicates involve the partition columns, because files in partitions that cannot match the predicate are skipped.

SQL:

```sql
CREATE TABLE clicks (
  date DATE,
  eventId STRING,
  eventType STRING,
  data STRING)
USING delta
PARTITIONED BY (date)
```
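Delta records each file's partition values in its transaction log, so a query filtering on `date` can skip whole files without opening them. The sketch below models that pruning step over a hypothetical file listing shaped like the log's `add` entries for the `clicks` table; it illustrates the idea and is not Delta's query planner.

```python
from datetime import date

# Hypothetical file listing, shaped like "add" entries in a Delta log
FILES = [
    {"path": "date=2020-03-01/part-00000.parquet", "partitionValues": {"date": "2020-03-01"}},
    {"path": "date=2020-03-02/part-00000.parquet", "partitionValues": {"date": "2020-03-02"}},
    {"path": "date=2020-03-03/part-00000.parquet", "partitionValues": {"date": "2020-03-03"}},
]


def files_to_scan(files: list, start: date, end: date) -> list:
    """Partition pruning: keep only files whose date partition is in [start, end].

    Files outside the range are never read, which is where the speed-up comes from.
    """
    selected = []
    for f in files:
        d = date.fromisoformat(f["partitionValues"]["date"])
        if start <= d <= end:
            selected.append(f["path"])
    return selected


# Roughly: SELECT * FROM clicks WHERE date BETWEEN '2020-03-02' AND '2020-03-03'
scan = files_to_scan(FILES, date(2020, 3, 2), date(2020, 3, 3))
print(scan)
```

A predicate on a non-partition column such as `eventType` gives no partition-level information, so every file would remain a candidate.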

Batch upserts: To merge a set of updates and inserts into an existing table, use the `MERGE INTO` statement. For example, the following statement merges the rows of an `updates` table into the `clicks` table. If a click event with the same `eventId` is already present, Delta Lake updates its `data` column using the given expression. When there is no matching event, Delta Lake inserts a new row.

SQL:

```sql
MERGE INTO clicks
USING updates
ON clicks.eventId = updates.eventId
WHEN MATCHED THEN
  UPDATE SET clicks.data = updates.data
WHEN NOT MATCHED
  THEN INSERT (date, eventId, data) VALUES (updates.date, updates.eventId, updates.data)
```
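In Delta Lake, the MERGE runs on Spark and is committed as a single atomic transaction. The sketch below only models the row-level outcome of the statement above in plain Python, with hypothetical sample rows. It assumes `eventId` is unique within `updates` (Delta rejects a MERGE in which several source rows match the same target row).

```python
def merge_clicks(target: dict, updates: list) -> dict:
    """Toy model of the MERGE INTO statement, keyed on eventId.

    target maps eventId -> row; updates is a list of rows. Returns a new dict.
    """
    keys = [u["eventId"] for u in updates]
    if len(keys) != len(set(keys)):
        # Assumption: eventId is unique within the updates batch.
        raise ValueError("duplicate eventId in updates")
    merged = {k: dict(row) for k, row in target.items()}  # leave input unchanged
    for u in updates:
        if u["eventId"] in merged:
            # WHEN MATCHED THEN UPDATE SET data = updates.data
            merged[u["eventId"]]["data"] = u["data"]
        else:
            # WHEN NOT MATCHED THEN INSERT (date, eventId, data);
            # eventType is not in the INSERT list, so it stays NULL (None).
            merged[u["eventId"]] = {"date": u["date"], "eventId": u["eventId"],
                                    "eventType": None, "data": u["data"]}
    return merged


clicks = {"e1": {"date": "2020-03-01", "eventId": "e1", "eventType": "tap", "data": "old"}}
updates = [
    {"date": "2020-03-01", "eventId": "e1", "data": "new"},
    {"date": "2020-03-02", "eventId": "e2", "data": "first"},
]
result = merge_clicks(clicks, updates)
print(result["e1"]["data"], result["e2"]["eventType"])  # new None
```

Note that `eventType` is absent from the statement's `INSERT` column list, so newly inserted click events have no event type until it is set elsewhere.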

Read Table: A Delta table can be queried either by its file location or by its table name:

SQL:

```sql
-- By file location
SELECT * FROM delta.`/delta_sample/clicks`;

-- By table name
SELECT * FROM clicks;
```
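Whichever form is used, Delta works out which Parquet files make up the table by reading the `_delta_log` directory rather than by listing the data files. The simplified sketch below replays hypothetical commits to find the live files. It ignores checkpoints, schema, and statistics, and its optional `version` argument corresponds loosely to time travel (for example, `SELECT * FROM clicks VERSION AS OF 0` in Databricks SQL).

```python
import json


def live_files(commits: list, version=None) -> set:
    """Replay Delta-style commits in order and return the live data files.

    commits[v] holds the JSON action lines of version v. Passing a version
    stops the replay there, loosely mirroring a VERSION AS OF query.
    Checkpoints and metadata actions are ignored in this sketch.
    """
    live = set()
    for v, lines in enumerate(commits):
        if version is not None and v > version:
            break
        for line in lines:
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live


# Hypothetical log: version 1 rewrites part-0 into part-1 (e.g. after a MERGE).
commits = [
    ['{"add": {"path": "part-0.parquet"}}'],
    ['{"remove": {"path": "part-0.parquet"}}', '{"add": {"path": "part-1.parquet"}}'],
]
print(live_files(commits))             # {'part-1.parquet'}
print(live_files(commits, version=0))  # {'part-0.parquet'}
```

Because a removed file stays on storage until it is cleaned up, older versions remain readable, which is what makes versioned reads possible.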

At a high level, Talend's role in data egress, analytics, and machine learning: