With the advancement of cross-platform Web and mobile development languages, libraries, and tools such as React [1], React Native [2], and Expo [3], mobile apps are finding more and more applications in machine learning and other domains. The releases of TensorFlow.js for React Native [4] and TensorFlow Lite [5] allow us to train new machine learning and deep learning models, or use pre-trained models for prediction and other machine learning purposes, directly on mobile devices.

Recently, I published two articles [6][7] demonstrating how to use Expo [3], React [1], and React Native [2] to develop multi-page mobile applications. In those articles, I showed how to leverage TensorFlow.js for React Native [4] and the pre-trained convolutional neural network models MobileNet and COCO-SSD for image classification and object detection, respectively, on mobile devices.

As described in [6][7], React [1] is a popular JavaScript framework for building Web user interfaces. React Native inherits and extends the component framework of React (e.g., components, props, state, JSX) to support the development of native Android and iOS applications using pre-built native components such as View, Text, and TouchableOpacity.

Native code in platform-specific languages (e.g., Objective-C, Swift, Java) is typically developed using Xcode or Android Studio. To simplify mobile app development, Expo [3] provides a framework and platform built around React Native and the native mobile platforms that allows us to develop, build, and deploy applications for iOS, Android, and the Web using JavaScript/TypeScript. As a result, any text editor can be used for coding.

In this article, similarly to [6][7], I develop a multi-page mobile application to demonstrate how to use TensorFlow.js for React Native [4] and a pre-trained deep natural language processing model, MobileBERT [8][9][10], for reading comprehension on mobile devices.

Similarly to [6][7], this mobile application is developed on a Mac as follows:

- using Expo to generate a multi-page application template

- installing libraries

- developing mobile application code in React JSX

- compiling and running

Before beginning, you should have the latest version of Node.js installed on your local computer (e.g., a Mac).

1. Generating project template

To generate a new project template automatically with Expo CLI, first install Expo CLI globally so that the expo command is available:

```sh
npm install -g expo-cli
```

Then a new Expo project template can be generated as follows:

```sh
expo init qna
cd qna
```

The project name is qna (i.e., question and answer) in this article.

As described in [6][7], I chose the tabs template of the Expo managed workflow to generate several example screens and navigation tabs automatically. The TensorFlow logo image file tfjs.jpg is used in this project; it needs to be stored in the generated ./assets/images directory.

2. Installing libraries

The following libraries need to be installed for developing the reading comprehension mobile app:

- @tensorflow/tfjs, that is, TensorFlow.js, an open-source hardware-accelerated JavaScript library for training and deploying machine learning models.

- @tensorflow/tfjs-react-native, a new platform integration and backend for TensorFlow.js on mobile devices.

- @react-native-community/async-storage, an asynchronous, unencrypted, persistent, key-value storage system for React Native.

- expo-gl, which provides a View that acts as an OpenGL ES render target, useful for rendering 2D and 3D graphics.

- @tensorflow-models/qna, the pre-trained natural language processing model MobileBERT [9][10], which takes a question and a related passage as input and returns an array of the most likely answers to the question, their confidence scores, and their locations in the passage (the start and end indices of each answer).

```sh
npm install @react-native-community/async-storage @tensorflow/tfjs @tensorflow/tfjs-react-native expo-gl @tensorflow-models/qna
```

In addition, react-native-fs (native filesystem access for React Native) is required because it is used by @tensorflow/tfjs-react-native/dist/bundle_resource_io.js:

```sh
npm install react-native-fs
```

expo-camera (a React component that renders a preview of the device's front or back camera) is also needed because it is used in @tensorflow/tfjs-react-native/dist/camera/camera_stream.js:

```sh
expo install expo-camera
```

3. Developing reading comprehension mobile application code

As described before, first, I used Expo CLI to generate example screens and navigation tabs automatically. Then I modified the generated screens and added a new Qna (question and answer) screen for reading comprehension. The following are the resulting screens:

- Introduction screen (see Figure 2)

- Reading comprehension (Qna) screen (see Figure 3)

- References screen (see Figure 4)

There are three corresponding tabs at the bottom of the screen for navigation purposes.

This article focuses on the Qna screen class (see [11] for source code) for natural language reading comprehension. The rest of this section discusses the implementation details.

3.1 Preparing TensorFlow and MobileBERT model

The lifecycle method componentDidMount() is used to initialize TensorFlow.js and to load the pre-trained MobileBERT [9][10] model once the Qna screen component has been mounted.

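```javascript
// Imports this method relies on (packages installed in Section 2):
import * as tf from '@tensorflow/tfjs';
import '@tensorflow/tfjs-react-native'; // registers the React Native platform/backend
import * as qna from '@tensorflow-models/qna';

// Inside the Qna screen class component:
async componentDidMount() {
  await tf.ready(); // wait until TensorFlow.js and its backend are ready
  this.setState({ isTfReady: true });

  this.model = await qna.load(); // load the pre-trained MobileBERT model
  this.setState({ isModelReady: true });
}
```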
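Because componentDidMount() sets isTfReady and isModelReady in two separate steps, the screen can report progress while the model loads and keep the "Find Answer" button disabled until both steps finish. The following is a minimal sketch under that assumption; the helper names loadingStatus and canFindAnswers are hypothetical and not part of the original project.

```javascript
// Hypothetical helper (not from the original project): maps the two readiness
// flags set in componentDidMount() to a status line shown on the Qna screen.
function loadingStatus({ isTfReady, isModelReady }) {
  if (!isTfReady) {
    return 'Preparing TensorFlow.js...';
  }
  if (!isModelReady) {
    return 'Loading MobileBERT model...';
  }
  return 'Ready';
}

// Hypothetical guard: the "Find Answer" button is only enabled when both
// TensorFlow.js and the MobileBERT model are ready.
function canFindAnswers(state) {
  return Boolean(state.isTfReady && state.isModelReady);
}

console.log(loadingStatus({ isTfReady: true, isModelReady: false }));
// prints "Loading MobileBERT model..."
```

In a render method, these could be used as, for example, `<Text>{loadingStatus(this.state)}</Text>` and `disabled={!canFindAnswers(this.state)}` on the button.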

3.2 Selecting passage and question

Once the TensorFlow library and the MobileBERT model are ready, the mobile app user can type in a passage and a related question.

For convenience, the passage and question in [10] are reused as the default in this article for demonstration purposes.

Default passage:

Google LLC is an American multinational technology company that specializes in Internet-related services and products, which include online advertising technologies, search engines, cloud computing, software, and hardware. It is considered one of the Big Four technology companies, alongside Amazon, Apple, and Facebook. Google was founded in September 1998 by Larry Page and Sergey Brin while they were Ph.D. students at Stanford University in California. Together they own about 14 percent of its shares and control 56 percent of the stockholder voting power through supervoting stock. They incorporated Google as a California privately held company on September 4, 1998, in California. Google was then reincorporated in Delaware on October 22, 2002. An initial public offering (IPO) took place on August 19, 2004, and Google moved to its headquarters in Mountain View, California, nicknamed the Googleplex. In August 2015, Google announced plans to reorganize its various interests as a conglomerate called Alphabet Inc. Google is Alphabet's leading subsidiary and will continue to be the umbrella company for Alphabet's Internet interests. Sundar Pichai was appointed CEO of Google, replacing Larry Page who became the CEO of Alphabet.

Default question:

Who is the CEO of Google?
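Since the user can type arbitrary text, it may help to check the input before calling the model. The following is a minimal sketch with a hypothetical helper named prepareInput (not part of the original project). It trims the question but deliberately leaves the passage unchanged, on the assumption that the indices returned by the model should refer to the passage exactly as displayed.

```javascript
// Hypothetical helper (not from the original project): checks the text the
// user typed before it is passed to this.model.findAnswers(question, passage).
function prepareInput(question, passage) {
  const q = (question || '').trim();
  // The passage is only checked, not trimmed, so that the startIndex/endIndex
  // values returned by the model still line up with the text shown on screen.
  const p = passage || '';
  if (q.length === 0 || p.trim().length === 0) {
    return { ok: false, error: 'Please enter both a passage and a question.' };
  }
  return { ok: true, question: q, passage: p };
}

const input = prepareInput('  Who is the CEO of Google?  ', 'Sundar Pichai was appointed CEO of Google.');
console.log(input.ok, input.question); // prints "true Who is the CEO of Google?"
```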

3.3 Finding answers to question

Once a passage and a question have been entered on the mobile device, the user can tap the "Find Answer" button to call the method findAnswers(), which finds possible answers to the question in the passage.

In this method, the loaded MobileBERT model is called with the question and passage as input and returns a list of possible answers, each with a confidence score and its location in the passage (the start and end indices of the answer).

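```javascript
// Called when the user taps the "Find Answer" button.
findAnswers = async () => {
  try {
    // Current question and passage held in component state.
    const question = this.state.default_question;
    const passage = this.state.default_passage;

    // Resolves to an array of { text, score, startIndex, endIndex } objects.
    const answers = await this.model.findAnswers(question, passage);
    console.log('answers: ');
    console.log(answers);
    return answers;
  } catch (error) {
    console.log('Exception Error: ', error);
  }
};
```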
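Each returned answer carries startIndex and endIndex, which can be used to locate the answer in the passage, for example to highlight it. In the sample output in Section 4.2, endIndex minus startIndex equals the length of each answer text (e.g., 1206 − 1186 = 20 characters for "replacing Larry Page"), which suggests that endIndex is exclusive, as in String.prototype.slice. The following is a minimal sketch under that assumption; splitAtAnswer is a hypothetical helper, not part of the qna package.

```javascript
// Hypothetical helper: splits the passage around one answer so that the answer
// span can be rendered with a different style (e.g., bold) inside a <Text>.
// Assumes endIndex is exclusive, like the end argument of String.prototype.slice.
function splitAtAnswer(passage, answer) {
  const { startIndex, endIndex } = answer;
  return {
    before: passage.slice(0, startIndex),
    match: passage.slice(startIndex, endIndex),
    after: passage.slice(endIndex),
  };
}

const passage = 'Sundar Pichai was appointed CEO of Google.';
const answer = { text: 'Sundar Pichai', startIndex: 0, endIndex: 13 };
const parts = splitAtAnswer(passage, answer);
console.log(parts.match); // prints "Sundar Pichai"
```

Comparing parts.match with answer.text is also a cheap sanity check that the passage passed to the model is the same string that is displayed.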

3.4 Reporting answers

Once the reading comprehension is done, the method renderAnswer() is called to display the answers on the screen of the mobile device.

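```javascript
// Renders one answer returned by findAnswers(). The (answer, index) signature
// matches an Array.prototype.map callback, so the key is placed on the
// outermost element that is returned.
renderAnswer = (answer, index) => {
  // score is the model's raw confidence score, not a normalized probability.
  const { text, score, startIndex, endIndex } = answer;
  return (
    <View key={index} style={styles.welcomeContainer}>
      <Text style={styles.text}>
        Answer: {text}, Score: {score}, start: {startIndex}, end: {endIndex}
      </Text>
    </View>
  );
};
```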
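The score field holds the model's raw score (12.2890625 for the top answer in the sample output in Section 4.2), so long decimals can crowd a small phone screen. The following is a minimal sketch of a hypothetical formatting helper, formatAnswer (not part of the original project), that builds the same kind of line as renderAnswer() but rounds the score to two decimal places.

```javascript
// Hypothetical helper: builds the text line for one answer, rounding the raw
// score so that values such as 12.2890625 stay readable on a phone screen.
function formatAnswer(answer) {
  const { text, score, startIndex, endIndex } = answer;
  return `Answer: ${text}, Score: ${score.toFixed(2)}, start: ${startIndex}, end: ${endIndex}`;
}

console.log(formatAnswer({ text: 'Larry Page', score: 9.658203125, startIndex: 1196, endIndex: 1206 }));
// prints "Answer: Larry Page, Score: 9.66, start: 1196, end: 1206"
```

Inside the JSX, the line could then be rendered as `<Text style={styles.text}>{formatAnswer(answer)}</Text>`.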

4. Compiling and running mobile application

The mobile application in this article consists of a React Native application server and one or more mobile clients. A mobile client can be an iOS simulator, an Android emulator, an iOS device (e.g., iPhone or iPad), an Android device, or any other compatible simulator. I verified the mobile application server on a Mac and mobile clients on both an iPhone 6+ and an iPad.

4.1 Starting React Native Application Server

As described in [6][7], the mobile app server needs to be started before any mobile client can run. Use the following commands to compile and run the React Native application server:

```sh
npm install
npm start
```

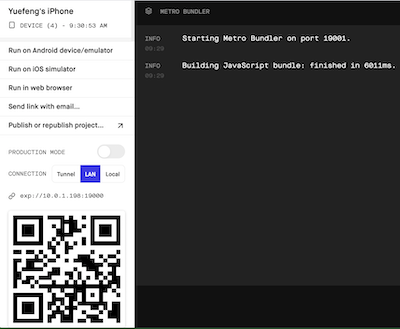

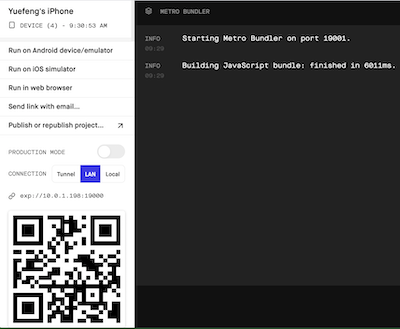

If everything goes smoothly, a Web interface should appear, as shown in Figure 1.

Figure 1: React Native application server.

4.2 Starting mobile clients

Once the mobile app server is running, we can start mobile clients on mobile devices.

Since I use Expo [3] for development in this article, the corresponding Expo client app is needed on the mobile devices. The Expo client app for iOS devices is available for free in the App Store.

Once the Expo client app has been installed on an iOS device, we can use the device's camera to scan the QR code shown by the React Native application server (see Figure 1), which opens and runs the mobile application in the Expo client app.

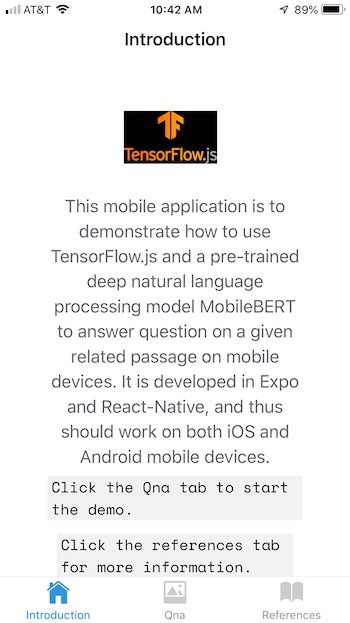

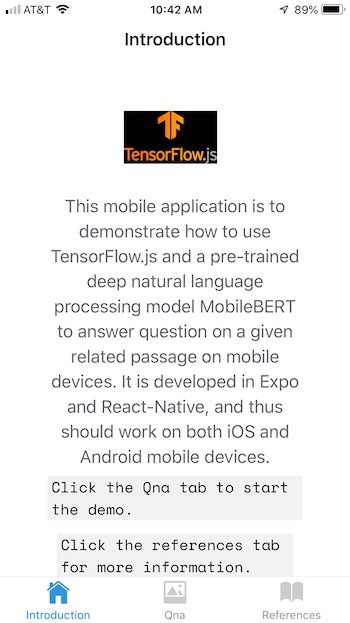

Figure 2 shows the introduction screen of the mobile application on iOS devices (iPhone and iPad).

Figure 2: Introduction screen on iOS devices.

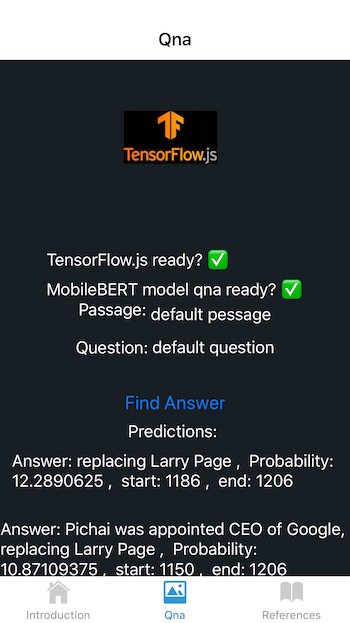

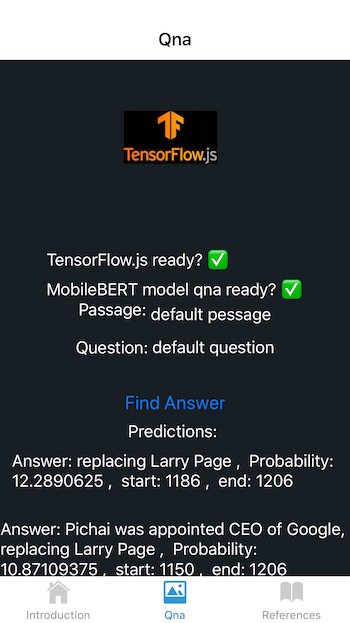

Figure 3 shows the screen of reading comprehension (passage, question, “Find Answer” button, and answers).

Figure 3: Qna screen on iOS devices.

The following is the console output of the findAnswers() method call:

```
Array [
  Object {
    "endIndex": 1206,
    "score": 12.2890625,
    "startIndex": 1186,
    "text": "replacing Larry Page",
  },
  Object {
    "endIndex": 1206,
    "score": 10.87109375,
    "startIndex": 1150,
    "text": "Pichai was appointed CEO of Google, replacing Larry Page",
  },
  Object {
    "endIndex": 1206,
    "score": 9.658203125,
    "startIndex": 1196,
    "text": "Larry Page",
  },
  Object {
    "endIndex": 1156,
    "score": 5.2802734375,
    "startIndex": 1150,
    "text": "Pichai",
  },
]
```
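The scores in the output above are unnormalized (they are greater than 1 and do not sum to 1), and the candidates appear to be listed in descending order of score. If probability-like numbers are preferred for display, one option, which the original app does not use, is a softmax over the returned candidates. The following is a minimal sketch with a hypothetical helper, softmaxScores; the resulting weights only compare the returned candidates with each other and are not calibrated probabilities of correctness.

```javascript
// Hypothetical post-processing (not done by the original app): converts the raw
// scores of the returned candidates into relative weights that sum to 1.
function softmaxScores(answers) {
  if (answers.length === 0) {
    return [];
  }
  const maxScore = Math.max(...answers.map((a) => a.score));
  // Subtract the maximum before exponentiating for numerical stability.
  const exps = answers.map((a) => Math.exp(a.score - maxScore));
  const total = exps.reduce((sum, e) => sum + e, 0);
  // Keep the original fields and add a normalized weight to each answer.
  return answers.map((a, i) => ({ ...a, weight: exps[i] / total }));
}

// Scores taken from the console output above.
const answers = [
  { text: 'replacing Larry Page', score: 12.2890625 },
  { text: 'Pichai was appointed CEO of Google, replacing Larry Page', score: 10.87109375 },
  { text: 'Larry Page', score: 9.658203125 },
  { text: 'Pichai', score: 5.2802734375 },
];
for (const a of softmaxScores(answers)) {
  console.log(`${a.text}: ${a.weight.toFixed(3)}`);
}
```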

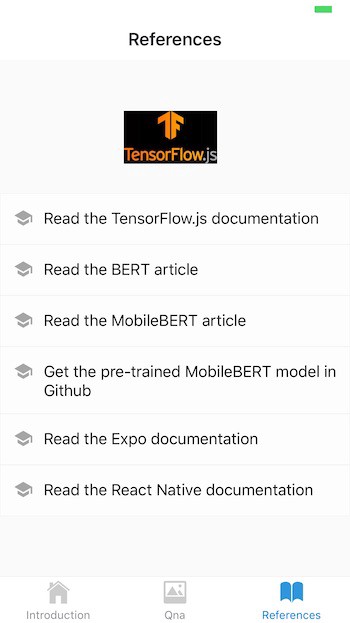

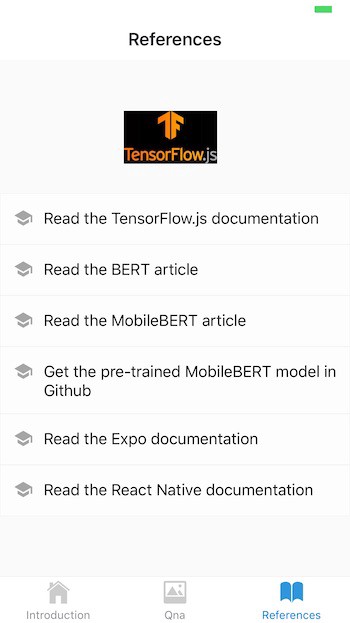

Figure 4 shows the screen of references.

Figure 4: References screen on iOS devices.

5. Summary

Similarly to [6][7], in this article, I developed a multi-page mobile application for reading comprehension (question and answer) on mobile devices using Expo [3], React JSX, React Native [2], TensorFlow.js for React Native [4], and a pre-trained deep natural language processing model MobileBERT [9][10].

I verified the mobile application server on Mac and the mobile application clients on iOS mobile devices (both iPhone and iPad).

As demonstrated in [6][7] and this article, such a mobile app can potentially be used as a template for developing other machine learning and deep learning mobile apps.

The mobile application project files for this article are available on GitHub [11].

References

[1] React

[2] React Native

[3] Expo

[4] TensorFlow.js for React Native

[5] TensorFlow Lite

[6] Y. Zhang, Deep Learning for Image Classification on Mobile Devices

[7] Y. Zhang, Deep Learning for Detecting Objects in an Image on Mobile Devices

[8] J. Devlin, M.-W. Chang, et al., BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

[9] Z. Sun, H. Yu, et al., MobileBERT: a Compact Task-Agnostic BERT for Resource-Limited Devices

[10] Pre-trained MobileBERT in TensorFlow.js for Question and Answer

[11] Y. Zhang, Mobile app project files on GitHub