Did you know there are over 38,000 James Smiths in the U.S.? 38,000!

No wonder manually managing data quality is a losing battle. Whether it’s duplicates, misspellings, or incomplete records, bad data costs companies up to $12.8 million annually.

The fact that bad data costs companies money is no longer news. It’s just the reality we’re all grappling with as both the amount of data we consume and our reliance on it grow.

Augmented data quality technology gains traction

According to Gartner, by 2022, 60% of organizations will leverage AI-enabled data quality technology that makes suggestions, reducing the manual tasks involved in improving data quality.

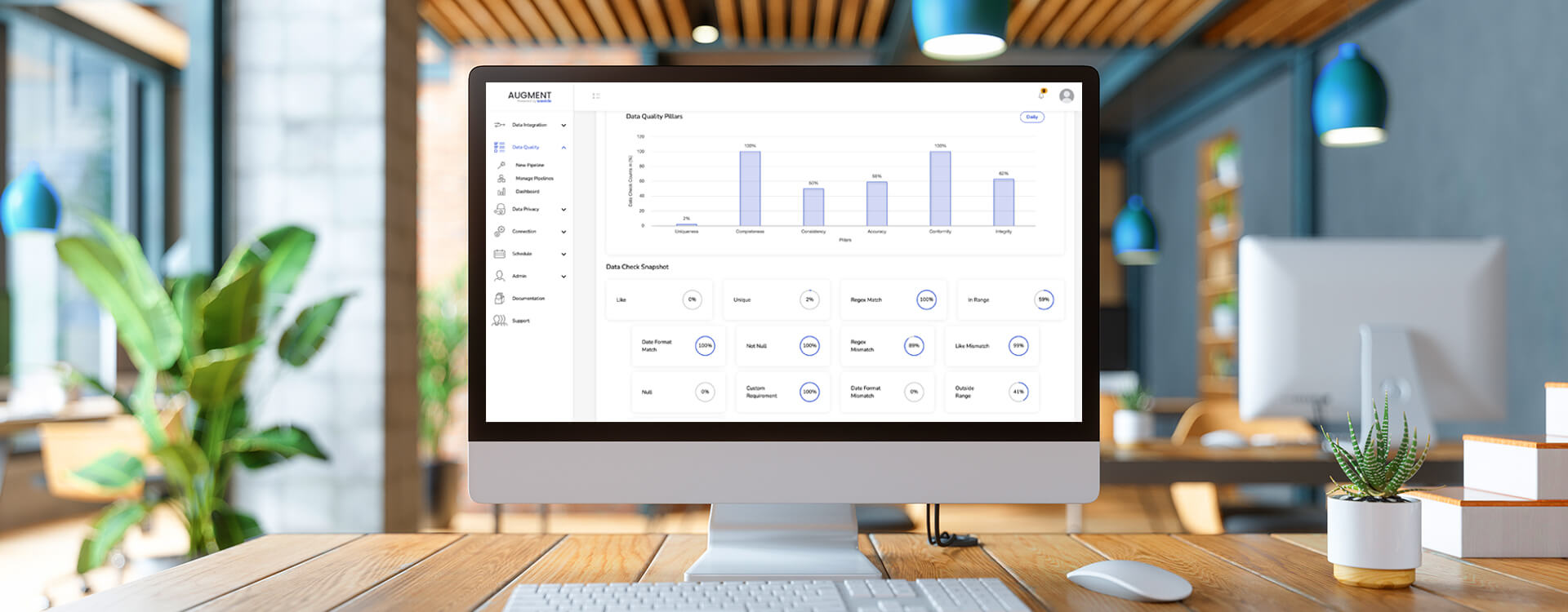

Technology like Wavicle’s Augment™ uses machine learning to continuously analyze and monitor data quality. With Augment, you can easily identify data quality patterns and analyze the impact data quality has on your business models.

The technology is here today; there’s no need to wait until 2022. Here are 6 data quality checks you can, and will want to, automate ASAP.

#1 Uniqueness

Thanks to our good friend James Smith, and his fellow 37,999 James Smiths, uniqueness is one task you do not want to tackle alone.

Augment uses SQL rules to automatically look for distinct values. If a large percentage of records are duplicates (like the 37,999 other James Smiths), data quality is poor.
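Augment’s actual SQL rules aren’t shown in this post, but the idea is similar in spirit to a `COUNT(DISTINCT ...)` rule. As a rough sketch in Python (the function name `uniqueness_report` and the sample names are hypothetical), a uniqueness check counts distinct values and reports how many records repeat one:

```python
def uniqueness_report(values):
    """Return (distinct_ratio, duplicate_count) for one column of values.

    distinct_ratio: number of distinct values divided by number of records.
    duplicate_count: records that repeat a value already counted once.
    """
    total = len(values)
    if total == 0:
        return 1.0, 0  # an empty column has no duplicates
    distinct = len(set(values))
    return distinct / total, total - distinct


names = ["James Smith", "James Smith", "Maria Garcia", "James Smith", "Wei Chen"]
ratio, dupes = uniqueness_report(names)
print(f"{ratio:.0%} distinct, {dupes} duplicate records")  # 60% distinct, 2 duplicate records
```

A low distinct ratio on a column that should be unique is the signal to dig deeper.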

But say you need to know whether the James Smiths in your loyalty program are unique so you can trigger personalized offers. You can use a custom filter to search for James Smith together with the loyalty member number. If multiple James Smith records still share the same loyalty member number, you have duplicate data that should be cleaned.
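A minimal sketch of that custom filter, assuming each record is a dictionary with hypothetical `name` and `loyalty_id` fields: filter to James Smith, then look for repeated (name, loyalty number) pairs. This illustrates the logic only; it is not how Augment implements its filters.

```python
from collections import Counter


def duplicate_keys(records, key_fields):
    """Return composite keys that appear more than once, mapped to their counts."""
    counts = Counter(tuple(r[f] for f in key_fields) for r in records)
    return {key: n for key, n in counts.items() if n > 1}


# Hypothetical loyalty program records
loyalty = [
    {"name": "James Smith", "loyalty_id": "L-1001"},
    {"name": "James Smith", "loyalty_id": "L-2002"},
    {"name": "James Smith", "loyalty_id": "L-1001"},
    {"name": "Maria Garcia", "loyalty_id": "L-3003"},
]

# Filter to James Smith, then check (name, loyalty_id) pairs for repeats.
james = [r for r in loyalty if r["name"] == "James Smith"]
print(duplicate_keys(james, ["name", "loyalty_id"]))  # {('James Smith', 'L-1001'): 2}
```

Two James Smiths with different loyalty numbers are treated as different people; only the repeated pair is flagged for cleanup.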

Once this filter is set, you can schedule it to run automatically as frequently as you’d like.

#2 Completeness

Completeness flags when essential information is missing. The rule set for completeness searches for null values so you can identify if you are missing any crucial data like loyalty numbers, billing information, or mailing addresses.
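Here is one way to express a completeness check in Python. It’s an illustrative sketch, not Augment’s rule set: the field names are hypothetical, and treating empty strings as missing (alongside nulls and absent fields) is an assumption you may or may not want.

```python
def completeness(records, field):
    """Return the share of records where `field` is present and not null.

    Assumption: an empty string counts as missing, just like None or an absent key.
    """
    if not records:
        return 1.0  # nothing to check, so nothing is missing
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


# Hypothetical customer records with gaps
customers = [
    {"loyalty_id": "L-1001", "mailing_address": "12 Oak St"},
    {"loyalty_id": None, "mailing_address": "9 Elm Ave"},
    {"loyalty_id": "L-3003", "mailing_address": ""},
    {"mailing_address": "4 Pine Rd"},
]

for field in ("loyalty_id", "mailing_address"):
    print(field, f"{completeness(customers, field):.0%}")
```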

#3 Consistency

Files become inconsistent for many reasons, from human error to data that has degraded over time. Either way, it’s very common for companies to struggle with consistency, especially as they ingest more and more data.

Let’s say you’re an insurance provider that needs to send updated privacy information to all Virginia residents following the adoption of the Virginia Consumer Data Protection Act (VCDPA). If a user searches for “Virginia” but your records store the state as “VA,” the search returns nothing, even though the right customers are there. Using Like/regex patterns, Augment can scan the state column to see how many values start with a V and follow the expected format.
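The post doesn’t show Augment’s pattern syntax, so the sketch below uses Python’s `re` module instead. It assumes the expected convention is a two-letter uppercase code such as `VA`, finds every state value that starts with a V, and collects the ones that break the convention:

```python
import re
from collections import Counter

# Assumed convention: states are stored as two-letter uppercase codes ("VA").
STATE_CODE = re.compile(r"[A-Z]{2}")
# Any value beginning with "v" or "V", e.g. "VA", "Virginia", "Va.".
STARTS_WITH_V = re.compile(r"v", re.IGNORECASE)


def consistency_report(states):
    """Count state values starting with V and collect those that break the code convention."""
    v_values = [s for s in states if s and STARTS_WITH_V.match(s)]
    nonconforming = Counter(s for s in v_values if not STATE_CODE.fullmatch(s))
    return len(v_values), nonconforming


# Hypothetical state column with mixed spellings
state_values = ["VA", "Virginia", "VA", "va", "Va.", "VT", "TX", None]
count, offenders = consistency_report(state_values)
print(count, dict(offenders))
```

Note that `VT` also starts with V but conforms, so it isn’t flagged; the offenders (`Virginia`, `va`, `Va.`) are the values a Virginia search would miss.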

#4 Accuracy

Accuracy is based on the percentage of records that fall between the lower and upper limits you choose.

For example, if you are a manufacturer and want to measure first-pass yield (the number of products that meet specifications on the first pass divided by the total number of products produced), you might enter a lower limit of 92% and an upper limit of 98%.

If your first-pass yield on a single day falls outside that range, say at 85%, that day is an outlier and lowers your accuracy score.
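The accuracy check boils down to a share-within-limits calculation. This sketch assumes the limits are inclusive and uses made-up daily production counts; first-pass yield is computed as units passing on the first try divided by units produced.

```python
def first_pass_yield(passed_first_time, total_produced):
    """Units meeting spec on the first pass divided by total units produced."""
    return passed_first_time / total_produced


def in_range_share(values, lower, upper):
    """Share of values within [lower, upper] (inclusive), plus the outliers."""
    if not values:
        return 1.0, []
    outliers = [v for v in values if not lower <= v <= upper]
    return (len(values) - len(outliers)) / len(values), outliers


# Hypothetical daily production: (units passing first time, units produced)
days = [(950, 1000), (930, 1000), (970, 1000), (850, 1000), (960, 1000)]
daily_fpy = [first_pass_yield(p, n) for p, n in days]

share, outliers = in_range_share(daily_fpy, lower=0.92, upper=0.98)
print(f"{share:.0%} of days in range; outliers: {outliers}")  # 80% of days in range; outliers: [0.85]
```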

#5 Conformity

Conformity means that data is in the form or structure needed. For example, a date might need to follow an MM/DD/YY standard rather than DD/MM/YY or MM/DD/YYYY. If you are a global manufacturer and you have an order for supplies set to be delivered on 05/06/21, you’d better know if you will receive your shipment in May or June.

Using Augment, you can search for data conformity simply by entering the date format you want to search. The tool takes care of the SQL rules and will identify any data that does not match your requirements. Over time, machine learning can be paired with these rules to predict patterns.
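To make the conformity rule concrete, here is a hypothetical Python check for the MM/DD/YY format (it stands in for the SQL rules Augment generates, which the post doesn’t show). It first requires the exact two-digit shape, then asks `datetime.strptime` to confirm the value is a real calendar date:

```python
import re
from datetime import datetime

# Exact shape: two digits, slash, two digits, slash, two digits.
MMDDYY = re.compile(r"\d{2}/\d{2}/\d{2}")


def conforms_mmddyy(value):
    """True if value is a real calendar date written exactly as MM/DD/YY."""
    if not MMDDYY.fullmatch(value):
        return False  # wrong shape, e.g. a four-digit year or ISO format
    try:
        datetime.strptime(value, "%m/%d/%y")
    except ValueError:
        return False  # right shape, impossible date (month 13, February 30, ...)
    return True


dates = ["05/06/21", "13/06/21", "05/06/2021", "2021-05-06", "02/30/21"]
print([d for d in dates if not conforms_mmddyy(d)])
```

Note what a format check cannot do: `06/05/21` passes because it is a valid MM/DD/YY date, even if the sender wrote it as DD/MM/YY and meant May 6. Catching that kind of swap takes context about where the data came from.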

#6 Integrity

Data integrity refers to the characteristics that determine the reliability of your information. It is based on parameters such as the accuracy and consistency of the data over time. Measures for data integrity include the percentage of records that pass the In Range, Like/Regex pattern, and Not Null checks.

The In Range measure applies to numeric columns and the Like/Regex pattern applies to text columns, so the two can’t be applied to the same column. However, Not Null can be combined with either In Range or Like/Regex.

What does that look like? Let’s go back to our Virginia example. Say we have 100 records, and 90 include a state name. Of those 90, 40 start with ‘V.’ That means our Like pattern score is 40 percent (40 of 100 records) and our Not Null score is 90 percent (90 of 100 records). Overall integrity is the average of the two scores: (40 percent + 90 percent) / 2 checks = 65 percent.
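The same arithmetic as a short Python sketch, using made-up state values that reproduce the 100-record example. The `not_null` and `like_v` checks are hypothetical stand-ins for Augment’s Not Null and Like rules (`like_v` plays the role of SQL’s `LIKE 'V%'`), and each check is weighted equally, as in the example above.

```python
def integrity_score(values, checks):
    """Return per-check pass rates (percent) and their unweighted average."""
    if not values or not checks:
        raise ValueError("need at least one value and one check")
    total = len(values)
    rates = {
        name: 100 * sum(1 for v in values if check(v)) / total
        for name, check in checks.items()
    }
    return rates, sum(rates.values()) / len(rates)


# 100 hypothetical records: 40 start with "V", 50 other states, 10 missing.
states = ["VA"] * 40 + ["TX"] * 50 + [None] * 10
checks = {
    "not_null": lambda v: v is not None,
    "like_v": lambda v: v is not None and v.startswith("V"),
}

rates, overall = integrity_score(states, checks)
print(rates, overall)  # {'not_null': 90.0, 'like_v': 40.0} 65.0
```

Equal weighting matches the example; if one check matters more to your business, a weighted average is a straightforward change.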

Schedule your data quality checks and let Augment do the work

With Augment, Wavicle’s unified data management platform, you can set all of these data quality checks once and schedule them to run at regular intervals of your choice. The results are populated in two dashboards – one for technical users and one for business users.

Using these dashboards, you always have a high-level view of your data quality, plus alerts when it’s time to check for quality issues. It’s that simple.
